The 2017 paper “Attention is All You Need” by Vaswani et al. presents a new way to build machines that can understand and generate human language. They introduce a model called the Transformer, which is based on a technique called attention.

The Transformer model is the catalyst of the current AI explosion.

Attention works by helping the model focus on the important parts of a sentence when trying to understand or generate text. It helps the model figure out which words are related to each other and how important they are in the overall meaning of the sentence.

The Transformer model is different from previous models because it doesn’t use the common building blocks (like RNNs or CNNs) that came before it.

Instead, it relies only on attention mechanisms to process language. This new approach makes the Transformer faster and better at understanding long sentences with complicated relationships between words.

The authors also came up with a few tricks to make the Transformer work well, such as:

- Using multiple attention “heads” to focus on different aspects of the input at the same time.

- Adding information about the position of words in a sentence, since the model doesn’t have a built-in way to understand word order.

- Using techniques like layer normalization and residual connections to help the model learn more effectively.

The Transformer model turned out to be very successful and has become the basis for many new language models, like BERT and GPT, which are used for tasks like translation, summarization, and more.

This article attempts to make the original technical paper accessible for non-technical people.

Abstract

Most current models that turn one sequence of symbols into another (like translating languages) use complex designs with repeating or grid-like structures, and they have separate parts for understanding and creating output. These models work best when they also use a technique called attention to help connect the understanding and creating parts.

The authors suggest a new, simpler model called the Transformer that relies only on attention and doesn’t use any repeating or grid-like structures. This new model works better, is easier to run in parallel (making it faster), and takes less time to train.

In tests on translating English to German and English to French, the Transformer beats previous top scores by a good margin. It also works well on other tasks, like figuring out the structure of English sentences (constituency parsing), with both large and limited amounts of training data.

Introduction

Recurrent neural networks, especially long short-term memory and gated recurrent networks, have been really good at handling sequences of data, like words in sentences, for tasks such as language modeling and machine translation. However, these models have a drawback: they process input one step at a time, because each step depends on the result of the previous one. This makes it hard to run the computation in parallel within a single training example, which becomes a real bottleneck for long sequences.

Attention mechanisms, which help focus on important parts of the input, have become popular in these models, allowing them to better understand relationships between parts of the input, regardless of their positions. Usually, attention is used together with recurrent networks.

In this work, the authors propose a new model called the Transformer, which doesn’t use any recurrent structures. Instead, it relies entirely on attention mechanisms to understand the relationships between input and output. This new approach allows for faster processing since it can be run in parallel, and it achieves state-of-the-art results in translation tasks with just a fraction of the training time needed for other models.

Background

Several earlier models tried to reduce this sequential bottleneck, such as the Extended Neural GPU, ByteNet, and ConvS2S. They use convolutional neural networks, which compute representations for all positions in parallel. However, the number of operations needed to relate two positions grows with the distance between them (linearly for ConvS2S, logarithmically for ByteNet), which makes it harder to learn connections between parts of a sequence that are far apart.

The Transformer reduces this to a constant number of operations, so it can learn these long-range connections more easily. It does this with self-attention, a mechanism that relates different positions of a single sequence to build a representation of that sequence. Self-attention had already been used successfully in tasks like reading comprehension and summarization.

Other models, such as end-to-end memory networks, use a recurrent attention mechanism instead of recurrence aligned with the sequence, and have done well on simple question answering and language modeling. What makes the Transformer special, according to the authors, is that it is the first such model to rely entirely on self-attention to compute representations of its input and output, without recurrent networks or convolution.

Model Architecture

Most powerful models for changing one sequence of symbols into another (like translation) have two main parts: an encoder and a decoder. The encoder takes the input sequence of symbols (like words) and turns them into a continuous representation. Then, the decoder generates the output sequence one symbol at a time, using the previously generated symbols as extra input for creating the next symbol.
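To make the "one symbol at a time" idea concrete, here is a minimal greedy decoding loop. It is only a sketch: `next_token_scores` is a hypothetical stand-in for a trained decoder and just reads from a hard-coded table, and the paper itself uses beam search rather than greedy choice for its reported results.

```python
from typing import Callable, Dict, List

BOS, EOS = "<s>", "</s>"  # hypothetical start and end markers


def greedy_decode(next_token_scores: Callable[[List[str]], Dict[str, float]],
                  max_len: int = 10) -> List[str]:
    """Generate tokens one at a time, feeding earlier outputs back in as input."""
    output = [BOS]
    for _ in range(max_len):
        scores = next_token_scores(output)   # the decoder only sees past tokens
        token = max(scores, key=scores.get)  # pick the highest-scoring next token
        if token == EOS:
            break
        output.append(token)
    return output[1:]  # drop the start marker


# Toy "decoder": the next token depends only on the last generated token.
TABLE = {
    BOS: {"das": 0.9, "Haus": 0.1},
    "das": {"Haus": 0.8, EOS: 0.2},
    "Haus": {EOS: 0.95, "das": 0.05},
}


def toy_scores(prefix: List[str]) -> Dict[str, float]:
    return TABLE[prefix[-1]]


print(greedy_decode(toy_scores))  # ['das', 'Haus']
```

Each new token is appended to the input for the next step, which is exactly why this kind of generation is sequential even when the encoder is not.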

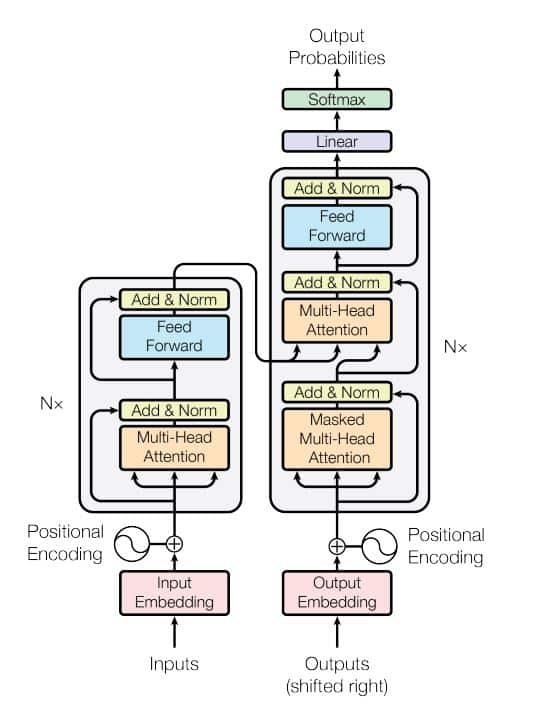

The Transformer model also has this encoder-decoder structure, but it uses self-attention (a way to focus on different parts of a single sequence) and simple, fully connected layers to build both the encoder and the decoder. You can see this in the left and right halves of the diagram in the original paper.

Encoder and Decoder Stacks

Encoder

The encoder has six identical layers stacked on top of each other. Each layer has two smaller parts. The first uses multi-head self-attention to let each position look at every other position in the input, and the second is a simple fully connected feed-forward network that is applied to each position separately and identically.

There’s a shortcut connection around each of these two parts, followed by something called layer normalization. This means the output of each part is a combination of the input and the part’s own processing, which helps the model learn more effectively.

All the smaller parts in the model, as well as the embedding layers, produce outputs of the same size (dmodel = 512 dimensions), so the shortcut connections can add each part's input and output directly.
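The shortcut-plus-normalization pattern, which the paper writes as LayerNorm(x + Sublayer(x)), can be sketched in a few lines of plain Python. This is a simplified illustration: real layer normalization also has a learned scale and shift, which are left out here, and the sub-layer is a hypothetical stand-in that just doubles its input.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, eps: float = 1e-6) -> Vector:
    """Rescale a vector to zero mean and unit variance (no learned gain or bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def sublayer_connection(x: Vector, sublayer: Callable[[Vector], Vector]) -> Vector:
    """Compute LayerNorm(x + Sublayer(x)); the sizes must match for the addition."""
    y = sublayer(x)
    if len(y) != len(x):
        raise ValueError("residual connection needs input and output of equal size")
    return layer_norm([a + b for a, b in zip(x, y)])


# Hypothetical sub-layer that doubles every value.
out = sublayer_connection([1.0, 2.0, 3.0, 4.0], lambda v: [2 * a for a in v])
print([round(v, 3) for v in out])
```

The size check is the code version of the 512-dimension rule above: the addition only makes sense if the sub-layer returns a vector as long as its input.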

Decoder

The decoder also has six identical layers stacked on top of each other. In addition to the two smaller parts found in each encoder layer, each decoder layer inserts a third part that performs multi-head attention over the encoder's output.

Just like in the encoder, there are shortcut connections around each part, followed by layer normalization. The decoder's self-attention part is also modified (masked) so that a position cannot attend to later positions. This masking, combined with the fact that the output embeddings are shifted one position to the right, ensures that the prediction for a given position can depend only on the known outputs at earlier positions.

Attention

Scaled Dot-Product Attention

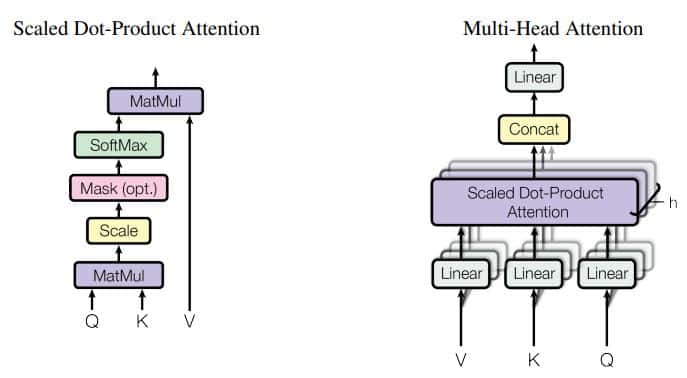

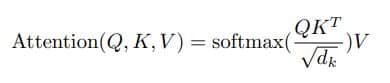

The researchers call their particular attention method "Scaled Dot-Product Attention." Its inputs are queries and keys of dimension dk and values of dimension dv. To decide how much weight each value gets, they take the dot product of the query with every key, divide each result by √dk, and apply a function called softmax, which turns the scores into weights that add up to 1. The output is the weighted sum of the values.

In practice, they compute attention for many queries at once by packing them into a matrix Q, with the keys and values packed into matrices K and V. The output matrix is:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$
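The formula translates almost line for line into code. The sketch below uses plain Python lists so it runs without extra libraries; a real implementation would use a tensor library and process many sentences at once. The toy Q, K and V values are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)                           # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, one output row per query."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


Q = [[1.0, 0.0]]                # one query
K = [[1.0, 0.0], [0.0, 1.0]]    # two keys
V = [[10.0, 0.0], [0.0, 10.0]]  # two values
print(scaled_dot_product_attention(Q, K, V))
```

The query matches the first key better, so the output leans toward the first value (roughly 6.7 versus 3.3) instead of picking it outright.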

The two most common attention methods are additive attention and dot-product attention. Dot-product attention is identical to the researchers' method except that it does not divide by √dk. Additive attention computes the scores with a small feed-forward network that has one hidden layer. The two are similar in theoretical complexity, but dot-product attention is much faster and more space-efficient in practice because it can use highly optimized matrix multiplication code.

For small values of dk, the two methods perform similarly. For larger dk, however, additive attention outperforms dot-product attention without scaling. The researchers suspect this is because the dot products grow large in magnitude, pushing the softmax into regions where its gradients are extremely small, which slows learning. Dividing by √dk counteracts this.
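The paper's footnote gives the intuition for why unscaled dot products grow with dk. Assume, as it does, that the components of a query q and a key k are independent random variables with mean 0 and variance 1:

$$
\begin{aligned}
q \cdot k &= \sum_{i=1}^{d_k} q_i k_i, \qquad \mathbb{E}[q_i k_i] = \mathbb{E}[q_i]\,\mathbb{E}[k_i] = 0,\\
\operatorname{Var}(q_i k_i) &= \mathbb{E}[q_i^2]\,\mathbb{E}[k_i^2] - 0^2 = 1,\\
\operatorname{Var}(q \cdot k) &= \sum_{i=1}^{d_k} \operatorname{Var}(q_i k_i) = d_k,
\qquad
\operatorname{Var}\!\left(\frac{q \cdot k}{\sqrt{d_k}}\right) = 1.
\end{aligned}
$$

With dk = 64, for example, unscaled scores would have a standard deviation of 8, which is enough to push the softmax toward a nearly one-hot output; dividing by √dk brings the spread back to about 1.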

Multi-Head Attention

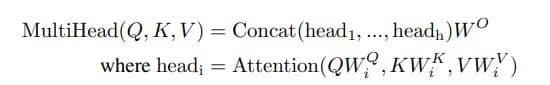

Instead of performing a single attention function with full-size (dmodel-dimensional) queries, keys, and values, the authors found it helpful to project them h times, using different learned linear projections, into smaller sizes (dk for queries and keys, dv for values). They then run the attention function on each of these projected versions in parallel, producing smaller outputs. These outputs are concatenated and projected once more to create the final values.


This method, called multi-head attention, lets the model pay attention to different types of information at different positions at the same time. With a single attention head, averaging across positions tends to blur these distinctions.

In their work, the researchers use h = 8 attention heads that run in parallel. Each head works with dk = dv = dmodel/h = 64 dimensions, so the total computing cost is similar to that of a single attention head with full dimensionality.

So, in short, they break the large attention function into smaller parts that work together, helping the model better understand the information while keeping the computing effort reasonable.
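Here is a minimal sketch of multi-head self-attention in plain Python. It assumes toy sizes (dmodel = 8, h = 2) instead of the paper's 512 and 8, and it uses random matrices as stand-ins for the learned projections, so the numbers it produces are meaningless; the point is the shape of the computation: project, attend per head, concatenate, project again.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    return matmul([softmax([s / math.sqrt(d_k) for s in row]) for row in scores], v)


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    # Stand-in for learned weights: small random values.
    return [[rng.gauss(0.0, 1.0 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x: Matrix, h: int, rng: random.Random) -> Matrix:
    """Run h smaller attention heads, concatenate them, and project back to d_model."""
    d_model = len(x[0])
    d_k = d_model // h  # in the paper: 512 // 8 = 64
    heads = []
    for _ in range(h):
        # Each head gets its own query, key and value projections.
        w_q, w_k, w_v = (random_matrix(d_model, d_k, rng) for _ in range(3))
        heads.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the heads position by position: n x (h * d_k) == n x d_model.
    concat = [[val for head in heads for val in head[i]] for i in range(len(x))]
    w_o = random_matrix(h * d_k, d_model, rng)  # final output projection
    return matmul(concat, w_o)


rng = random.Random(0)
x = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(3)]  # 3 positions, d_model = 8
out = multi_head_self_attention(x, h=2, rng=rng)
print(len(out), len(out[0]))  # 3 8
```

Because each head works in dmodel/h dimensions, the h heads together cost about the same as one full-size head, which matches the trade-off described above.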

Applications of Attention in our Model

The Transformer uses multi-head attention in three different ways to help it understand and process information better:

- In "encoder-decoder attention" layers, the queries come from the previous decoder layer, while the keys and values come from the encoder's output. This lets every position in the decoder pay attention to all positions in the input sequence, similar to what other sequence-to-sequence models do.

- The encoder has self-attention layers, where the queries, keys, and values all come from the output of the previous encoder layer. This lets each position in the encoder pay attention to all positions in the previous layer.

- The decoder also has self-attention layers, which let each position pay attention to all decoder positions up to and including itself. To stop information from flowing leftward (from later positions to earlier ones), the model sets the scores for those forbidden connections to −∞ before the softmax, so they receive zero weight.
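The decoder's masking trick can be sketched in plain Python. The raw scores below are made up, and the same row is reused for every query purely for illustration; the point is that any score for a later position becomes −∞, and exp(−∞) = 0, so it gets no weight after the softmax.

```python
import math
from typing import List


def causal_mask_scores(scores: List[List[float]]) -> List[List[float]]:
    """Set score[i][j] to -inf wherever key position j comes after query position i."""
    return [[s if j <= i else -math.inf for j, s in enumerate(row)]
            for i, row in enumerate(scores)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]  # math.exp(-inf) is 0.0
    total = sum(exps)
    return [e / total for e in exps]


scores = [[1.0, 2.0, 3.0] for _ in range(3)]  # illustrative raw scores for 3 positions
weights = [softmax(row) for row in causal_mask_scores(scores)]
for row in weights:
    print([round(w, 3) for w in row])
```

The first position can only look at itself, the second at the first two, and only the last position sees the whole sequence.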

In short, the Transformer uses multi-head attention to help the encoder and decoder focus on important information from different positions in the input and output sequences.

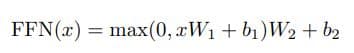

Position-wise Feed-Forward Networks

In the Transformer, besides the attention parts, each encoder and decoder layer also contains a fully connected feed-forward network. It is applied to each position separately and identically. It consists of two linear transformations with a ReLU activation in between, a function that returns the input value if it's positive and 0 otherwise.

The formula for this network is:

$$\mathrm{FFN}(x) = \max(0,\ xW_1 + b_1)\,W_2 + b_2$$

The linear transformations are the same across positions, but each layer uses its own parameters. Another way to describe this is as two convolutions with a kernel size of 1.

The input and output sizes are 512 (dmodel), while the inner layer size is 2048 (dff). In simpler terms, each layer in the encoder and decoder has an additional network that helps process the information.
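The feed-forward formula can be sketched for tiny hand-picked weights (dmodel = 2 and dff = 3 here, instead of 512 and 2048). The weights are made up for illustration; the sketch shows the same two linear steps and ReLU being applied to every position independently.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear(x: Vector, w: Matrix, b: Vector) -> Vector:
    """Compute x W + b for a single position (w has len(x) rows)."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]


def ffn(x: Vector, w1: Matrix, b1: Vector, w2: Matrix, b2: Vector) -> Vector:
    """FFN(x) = max(0, x W1 + b1) W2 + b2."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU
    return linear(hidden, w2, b2)


def position_wise_ffn(positions: List[Vector], w1, b1, w2, b2) -> List[Vector]:
    # The same weights are applied to each position, with no mixing between positions.
    return [ffn(x, w1, b1, w2, b2) for x in positions]


# Made-up weights: d_model = 2, d_ff = 3.
W1 = [[1.0, -1.0, 0.0],
      [0.0, 1.0, 1.0]]
B1 = [0.0, 0.0, -1.0]
W2 = [[1.0, 0.0],
      [0.0, 1.0],
      [1.0, 1.0]]
B2 = [0.0, 0.0]

print(position_wise_ffn([[1.0, 2.0], [2.0, 0.0]], W1, B1, W2, B2))
```

Because no position's output depends on any other position, this step can run for all positions in parallel.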

Embeddings and Softmax

The Transformer, like other models that process sequences, uses learned embeddings to turn input and output tokens (like words) into vectors of size dmodel. It also uses a learned linear transformation followed by softmax to convert the decoder's output into predicted probabilities for the next token.

In the Transformer, the same weight matrix is shared between the two embedding layers and the linear transformation before the softmax. In the embedding layers, these weights are multiplied by √dmodel.

In simpler terms, the Transformer shares a set of weights to change tokens into vectors and to predict the next tokens in the output sequence.
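A minimal sketch of this weight sharing, assuming a made-up 3-token vocabulary with dmodel = 4: the same matrix E is used to look up embeddings (scaled by √dmodel) and, read the other way, to score every vocabulary token against the decoder's output before the softmax.

```python
import math
from typing import List

Matrix = List[List[float]]


def embed(token_ids: List[int], E: Matrix) -> Matrix:
    """Look up embedding rows and scale them by sqrt(d_model)."""
    d_model = len(E[0])
    return [[v * math.sqrt(d_model) for v in E[t]] for t in token_ids]


def next_token_probs(decoder_output: List[float], E: Matrix) -> List[float]:
    """Pre-softmax projection reuses E: logit[t] = decoder_output . E[t]."""
    logits = [sum(a * b for a, b in zip(decoder_output, row)) for row in E]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 3-token vocabulary with d_model = 4 (one row per token).
E = [[0.1, 0.0, 0.2, 0.0],
     [0.0, 0.3, 0.0, 0.1],
     [0.2, 0.2, 0.2, 0.2]]

print(embed([1], E))
print(next_token_probs([0.0, 1.0, 0.0, 0.0], E))
```

Sharing one matrix means a token's input representation and its output score are tied together, and it saves one vocabulary-sized set of parameters.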

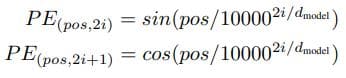

Positional Encoding

The Transformer model doesn’t use certain techniques like recurrence or convolution, so to understand the order of a sequence (like words in a sentence), it adds “positional encodings” to the input embeddings at the beginning of the encoder and decoder parts. These encodings have the same size as the embeddings, making it easy to combine them.

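The Transformer uses sine and cosine functions (wave-like patterns) of different frequencies as positional encodings:

$$PE_{(pos,\,2i)} = \sin\!\left(pos / 10000^{2i/d_{\text{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\!\left(pos / 10000^{2i/d_{\text{model}}}\right)$$

where pos is the position and i is the dimension. The authors chose this because, for any fixed offset k, the encoding of position pos + k can be written as a linear function of the encoding of position pos. They hypothesized that this would make it easy for the model to learn to pay attention by relative position.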

The researchers also tried learned positional embeddings instead and found that both versions produced nearly identical results. They kept the sinusoidal version because it may let the model handle sequences longer than the ones seen during training.
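The sinusoidal formula is easy to compute directly. The sketch below builds the encoding table and also checks the "relative position" property: a fixed rotation applied to each sine/cosine pair (the helper `shift_by` is introduced here for illustration) turns the encoding of position pos into the encoding of position pos + k. The toy sizes (20 positions, dmodel = 8) are assumptions.

```python
import math
from typing import List


def positional_encoding(max_len: int, d_model: int) -> List[List[float]]:
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


def shift_by(enc: List[float], k: int, d_model: int) -> List[float]:
    """Linear map (one rotation per sin/cos pair) taking PE[pos] to PE[pos + k]."""
    out = list(enc)
    for i in range(0, d_model - 1, 2):
        w = k / (10000 ** (i / d_model))
        s, c = enc[i], enc[i + 1]
        out[i] = s * math.cos(w) + c * math.sin(w)      # sin(a + b)
        out[i + 1] = c * math.cos(w) - s * math.sin(w)  # cos(a + b)
    return out


pe = positional_encoding(20, 8)
print([round(v, 3) for v in pe[1]])
print(all(abs(a - b) < 1e-9 for a, b in zip(shift_by(pe[3], 5, 8), pe[8])))  # True
```

The rotation depends only on the offset k, not on pos, which is what makes relative positions easy to express.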

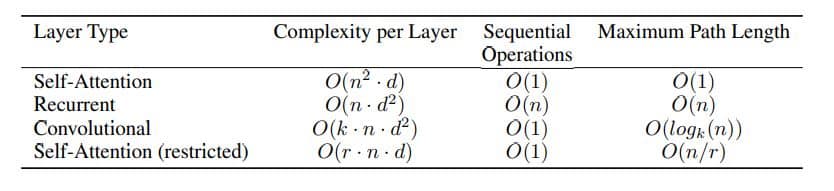

Why Self-Attention

The authors compare self-attention layers, which the Transformer uses, with other common methods like recurrent and convolutional layers for tasks involving sequences. They look at three important factors:

- Total effort needed per layer

- How much work can be done at the same time (parallelization)

- How easily the network can understand relationships between distant parts of the sequence

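Self-attention layers connect all positions with a constant number of sequential operations, while recurrent layers need a number of sequential steps that grows with the sequence length n, so self-attention is much easier to parallelize. Self-attention is also cheaper per layer than recurrence when the sequence length n is smaller than the representation size d, which is usually the case for sentences in machine translation. To handle very long sequences, self-attention could be restricted to a neighborhood of nearby positions, which the authors plan to study in the future.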

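A single convolutional layer with a kernel width k smaller than the sequence length n does not connect every pair of input and output positions. Connecting them all takes a stack of layers (about n/k for ordinary convolutions, or log_k(n) for dilated ones), which lengthens the paths between distant positions and makes long-range relationships harder to learn. Convolutional layers are also generally more expensive than recurrent layers, by a factor of k, although separable convolutions reduce this cost considerably.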
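The trade-offs can be made concrete with a little arithmetic based on the complexity comparison in the paper's Table 1 (constant factors ignored). The sentence length n = 50 and kernel width k = 3 are assumed values for illustration.

```python
from typing import Dict


def per_layer_cost(n: int, d: int, k: int = 3) -> Dict[str, int]:
    """Rough operation counts per layer, ignoring constant factors."""
    return {
        "self-attention": n * n * d,     # every position attends to every other position
        "recurrent": n * d * d,          # one d x d matrix product per time step
        "convolutional": k * n * d * d,  # k d x d products per position
    }


def dilated_conv_layers(n: int, k: int) -> int:
    """Fewest dilated conv layers (kernel width k) whose reach covers n positions."""
    layers, reach = 0, 1
    while reach < n:
        reach *= k
        layers += 1
    return layers


def max_path_length(n: int, k: int = 3) -> Dict[str, int]:
    """Most steps a signal needs to travel between two positions."""
    return {
        "self-attention": 1,
        "recurrent": n,
        "convolutional (dilated)": dilated_conv_layers(n, k),
    }


print(per_layer_cost(n=50, d=512))
print(max_path_length(n=50))
```

For a 50-token sentence with d = 512, self-attention does about d/n ≈ 10 times less work per layer than a recurrent layer; once n grows past d, the comparison flips, which is why the authors mention restricting attention to a neighborhood for very long inputs.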

An extra benefit of self-attention is that it can make the model easier to understand. The attention distributions can show how the model learns the structure and meaning of sentences.

Training

Training Data and Batching

The authors trained their model on two datasets:

- WMT 2014 English-German dataset: This dataset contains about 4.5 million sentence pairs. Sentences were encoded using byte-pair encoding, which resulted in a shared source-target vocabulary of about 37,000 tokens.

- WMT 2014 English-French dataset: This dataset is larger, consisting of 36 million sentences. The tokens were split into a 32,000 word-piece vocabulary.

During training, sentence pairs were batched together based on their approximate sequence length. Each training batch contained a set of sentence pairs with around 25,000 source tokens and 25,000 target tokens.
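The paper says only that sentence pairs were batched by approximate length; it does not spell out the exact procedure. The sketch below is one simple greedy approach (an assumption, not the authors' code): sort pairs by length, then start a new batch whenever adding the next pair would exceed a token budget on either side. The paper's budget was about 25,000 tokens per side; the test uses a tiny budget.

```python
from typing import List, Tuple

Pair = Tuple[List[str], List[str]]  # (source tokens, target tokens)


def batch_by_tokens(pairs: List[Pair], max_tokens: int) -> List[List[Pair]]:
    """Group sentence pairs of similar length so each batch stays under a token budget."""
    # Sorting by length keeps similar-length sentences together, which reduces padding.
    ordered = sorted(pairs, key=lambda p: (len(p[0]), len(p[1])))
    batches: List[List[Pair]] = []
    current: List[Pair] = []
    src_tokens = tgt_tokens = 0
    for src, tgt in ordered:
        too_big = src_tokens + len(src) > max_tokens or tgt_tokens + len(tgt) > max_tokens
        if current and too_big:
            batches.append(current)
            current, src_tokens, tgt_tokens = [], 0, 0
        current.append((src, tgt))
        src_tokens += len(src)
        tgt_tokens += len(tgt)
    if current:
        batches.append(current)
    return batches


pairs = [(["a"] * 3, ["x"] * 3), (["a"] * 1, ["x"] * 2),
         (["a"] * 2, ["x"] * 2), (["a"] * 4, ["x"] * 4)]
print([len(b) for b in batch_by_tokens(pairs, max_tokens=5)])
```

Budgeting by tokens rather than by sentence count keeps the amount of work per batch roughly constant, whether a batch holds many short sentences or a few long ones.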

Hardware and Schedule

The authors trained their models on a machine with 8 NVIDIA P100 GPUs. For the base models, with the hyperparameters mentioned in the paper, each training step took about 0.4 seconds. The base models were trained for 100,000 steps, which is approximately 12 hours. For the larger models, each training step took 1.0 seconds, and they were trained for 300,000 steps, which took around 3.5 days.

Optimizer

The authors used the Adam optimizer with β1 = 0.9, β2 = 0.98, and ε = 10⁻⁹. They also changed the learning rate (how big each update to the model's weights is) during training according to this formula:

$$\mathit{lrate} = d_{\text{model}}^{-0.5} \cdot \min\left(\mathit{step}^{-0.5},\ \mathit{step} \cdot \mathit{warmup\_steps}^{-1.5}\right)$$

This makes the learning rate increase linearly for the first 4000 training steps (called warmup_steps), and then decrease in proportion to the inverse square root of the step number.
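The schedule is a one-line function. This sketch uses the paper's dmodel = 512 and warmup_steps = 4000 as defaults; the guard for step 0 is an addition of this sketch, since the formula is undefined there.

```python
def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """lrate = d_model^-0.5 * min(step^-0.5, step * warmup_steps^-1.5)."""
    step = max(step, 1)  # guard: 0 ** -0.5 would raise ZeroDivisionError
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


for s in (100, 1000, 4000, 16000, 100000):
    print(s, f"{transformer_lr(s):.2e}")
```

The two terms inside `min` are equal exactly at step 4000, so that is where the learning rate peaks (about 7e-4). Halving the step before the peak halves the rate, and quadrupling it after the peak also halves it.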

Regularization

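Residual Dropout

The authors apply dropout to the output of each sub-layer before it is added to the sub-layer's input and normalized. They also apply dropout to the sums of the embeddings and the positional encodings in both the encoder and the decoder. The base model uses a dropout rate of 0.1.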

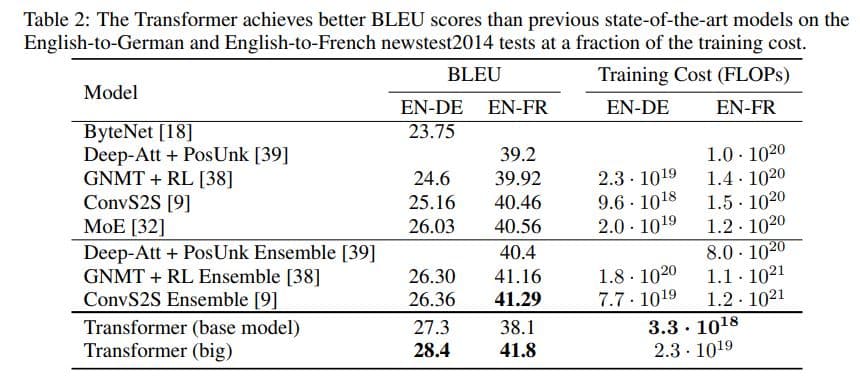

The Transformer model performs better than other top models for translating English to German and English to French. It gets higher scores using a measurement called BLEU (BiLingual Evaluation Understudy), which rates the quality of translations, and it does this with less effort in terms of FLOPs (the number of calculations needed).

For English-to-German translations, the Transformer (base model) gets a BLEU score of 27.3, and the Transformer (big) gets 28.4. Other models like ByteNet, GNMT + RL, ConvS2S, and MoE get lower scores ranging from 23.75 to 26.03.

For English-to-French translations, the Transformer (base model) gets a BLEU score of 38.1, and the Transformer (big) gets 41.8. Earlier single models such as Deep-Att + PosUnk, GNMT + RL, ConvS2S, and MoE score between 39.2 and 40.56: higher than the base model, but lower than the big one.

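When looking at training cost, the Transformer base model needs the fewest FLOPs of any model in the comparison, and the big model's cost is in the same range as earlier single models on English-to-German and much lower than theirs on English-to-French. This shows that the Transformer reaches better results at a similar or lower computing cost.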

Label Smoothing

During training, the authors used a technique called “label smoothing” with a value of 0.1. This technique makes the model a bit more uncertain in its predictions. Although it increases perplexity (a measure of how confused the model is), it actually improves accuracy and the BLEU score (a measure of translation quality). In other words, label smoothing helps the model make better predictions even though it becomes slightly more unsure.
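The paper gives the smoothing value (ε = 0.1) but this article does not spell out the exact formula. The sketch below uses one common formulation, which is an assumption here: keep 1 − ε of the probability on the correct token and spread ε evenly over the whole vocabulary. The 5-token vocabulary is made up.

```python
from typing import List


def smoothed_targets(correct: int, vocab_size: int, eps: float = 0.1) -> List[float]:
    """Mix a one-hot target with a uniform distribution over the vocabulary."""
    uniform = eps / vocab_size
    return [1.0 - eps + uniform if i == correct else uniform for i in range(vocab_size)]


print(smoothed_targets(correct=2, vocab_size=5))
```

Instead of training the model to put 100% on one token, the target now asks for about 92% on the correct token and a little on every other token, which is why the model ends up slightly less certain (and its perplexity slightly higher).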

Results

Machine Translation

The Transformer model, especially the bigger version, sets new high scores (BLEU scores) for translating English to German and English to French. On English to German it does better than all previous models, including groups of models working together (ensembles); on English to French it beats all previous single models.

For English-to-German translation, the big Transformer model gets a BLEU score of 28.4, which is more than 2.0 BLEU higher than the best earlier models, including ensembles. The training took 3.5 days using 8 powerful graphics cards (P100 GPUs). The smaller base model also does better than all previous models and ensembles, and it needs less effort to train than any other competitive models.

For English-to-French translation, the big Transformer model gets a BLEU score of 41.0, doing better than all earlier single models and using less than 1/4 of the training effort of the previous best model. For this task, the big model uses a dropout rate of 0.1 instead of 0.3.

To get these results, the authors averaged the last 5 checkpoints for the base models and the last 20 for the big models, used beam search with a beam size of 4 and a length penalty of α = 0.6, capped the output length at the input length plus 50, and stopped early when possible. They chose these settings after experiments on a development set.
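Checkpoint averaging simply takes the element-wise mean of each parameter across several saved copies of the model. The sketch below represents a checkpoint as a dictionary of hypothetical parameter names mapped to flat lists of numbers; real checkpoints hold large tensors, but the arithmetic is the same.

```python
from typing import Dict, List

Checkpoint = Dict[str, List[float]]  # hypothetical: parameter name -> flat values


def average_checkpoints(checkpoints: List[Checkpoint]) -> Checkpoint:
    """Element-wise mean of every parameter across the given checkpoints."""
    n = len(checkpoints)
    return {
        name: [sum(values) / n for values in zip(*(ckpt[name] for ckpt in checkpoints))]
        for name in checkpoints[0]
    }


ckpts = [{"w": [1.0, 2.0], "b": [0.0]}, {"w": [3.0, 4.0], "b": [1.0]}]
print(average_checkpoints(ckpts))  # {'w': [2.0, 3.0], 'b': [0.5]}
```

Averaging the last few checkpoints smooths out the noise from the final training steps, giving a single model that often scores slightly better than any individual checkpoint.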

The paper includes a table (Table 2) that compares the translation quality and training costs of the Transformer model to other models. The number of floating-point operations used to train a model is estimated by multiplying the training time, the number of GPUs used, and an estimate of each GPU's sustained single-precision floating-point capacity.
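The estimate is simple multiplication. The sketch below plugs in the training times from the Hardware and Schedule section and about 9.5 TFLOPS per P100 GPU, the figure the paper's footnote uses for that card.

```python
SECONDS_PER_HOUR = 3600


def training_flops(hours: float, num_gpus: int, tflops_per_gpu: float) -> float:
    """Training time x number of GPUs x sustained floating-point operations per second per GPU."""
    return hours * SECONDS_PER_HOUR * num_gpus * tflops_per_gpu * 1e12


base = training_flops(hours=12, num_gpus=8, tflops_per_gpu=9.5)
big = training_flops(hours=3.5 * 24, num_gpus=8, tflops_per_gpu=9.5)
print(f"base: {base:.1e} FLOPs, big: {big:.1e} FLOPs")  # base: 3.3e+18 FLOPs, big: 2.3e+19 FLOPs
```

These come out at about 3.3 × 10¹⁸ and 2.3 × 10¹⁹, in line with the training costs listed for the two Transformer models.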

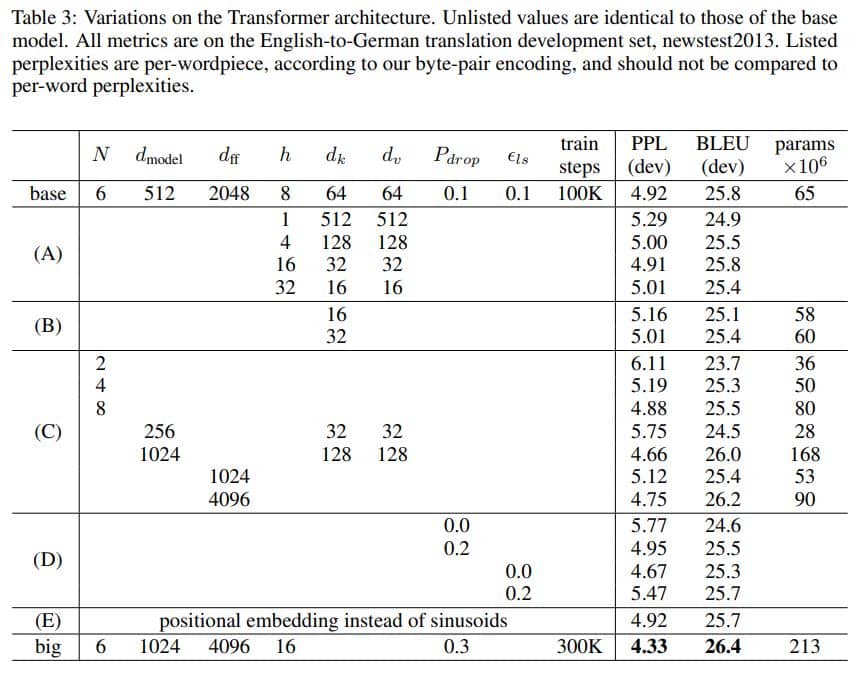

Model Variations

The authors of the Transformer model did a study to see how important different parts of the model are. They tested the changes in performance on English-to-German translation by tweaking the base model in various ways.

Some key findings from the different variations in Table 3 include:

- Changing the number of attention heads and the attention key and value dimensions, while keeping the amount of computation constant (rows A):

  - Using just one attention head makes the model perform 0.9 BLEU worse than the best setting.

  - Having too many heads also decreases the quality.

- Reducing the attention key size dk hurts the model quality (rows B):

  - This suggests that figuring out compatibility is not easy, and that a more sophisticated compatibility function than the dot product might help.

- As expected, bigger models perform better, and dropout is very helpful in avoiding overfitting (rows C and D).

- Replacing the sinusoidal positional encoding with learned positional embeddings (row E) gives results nearly the same as the base model.

These variations help understand how important different parts of the Transformer model are for its performance.

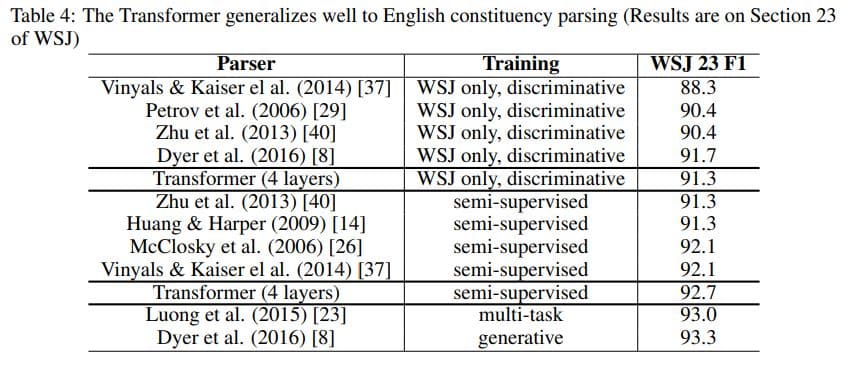

English Constituency Parsing

The authors of the Transformer model tested how well it could work on different tasks by trying it on English constituency parsing. This task is challenging because the output has strict structural rules and is usually longer than the input. Also, RNN sequence-to-sequence models haven’t done very well with limited data for this task.

They trained a 4-layer Transformer with dmodel = 1024 on the Wall Street Journal (WSJ) portion of the Penn Treebank, about 40,000 training sentences. They also trained it in a semi-supervised setting, using larger corpora with around 17 million sentences.

After only a small number of experiments to tune some settings, the Transformer performed surprisingly well. It did better than all previously reported models except the Recurrent Neural Network Grammar. Even when trained only on the 40,000 WSJ sentences, it outperformed the well-known BerkeleyParser.

These results show that the Transformer model can work well on other tasks, like English constituency parsing, without needing a lot of specific adjustments for that task.

Conclusion

The authors introduced the Transformer, a new type of model for translating sequences (like sentences) that relies entirely on attention instead of using recurrent layers, which are common in other models. The Transformer can be trained much faster than other models for translation tasks.

It achieved the best results so far on two translation tasks: English-to-German and English-to-French, even outdoing groups of previous models in the English-to-German task.

The authors are excited about using attention-based models for more tasks in the future. They plan to use the Transformer for different types of data beyond just text and to explore more efficient ways to handle large inputs and outputs like images, audio, and video. They also want to find ways to make the process of generating translations less step-by-step.

The code used to train and evaluate the Transformer models is available at https://github.com/tensorflow/tensor2tensor.

English bloke in Bangkok. First used GPT-3 in 2020 and has generated millions of words with it since. Not really much of an achievement but at least it demonstrates a smidgen of authority. Studies natural language processing, Python and Thai in his spare time.